An orofacial cleft is a birth defect in which tissues of the face, mouth, or lip do not fuse together correctly. Children born with a cleft undergo surgical procedures at a very young age and need long-term speech therapy. SpokeIt is an interdisciplinary project created by UC Santa Cruz's Computational Media and Psychology departments in collaboration with the medical team at UC Davis. SpokeIt is a game designed to make speech practice fun and effective. It benefits children with cleft speech in three ways: it makes practice seamless, it gives speech therapists access to their patients' progress, and it assigns words and phrases suited to each child's exercises. SpokeIt employs a dynamic curriculum that adjusts its difficulty as the child plays.

Researchers: Jared Duval, Zak Rubin, Sri Kurniawan

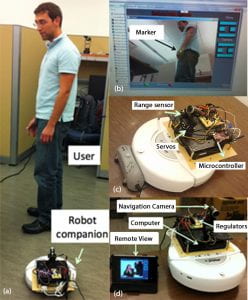

LASSIE is a low-cost assistive living robot designed for monitoring. A family member can steer it over the Internet to remotely check on an older relative living alone and help assess their well-being. We used a commercially available iRobot Create platform as the starting point for the robot, although the system could potentially run on another robotic base. Our system (i) takes advantage of commercially available systems to reduce development cost and effort, (ii) acts as a video and audio communication tool between older persons and their family members or caregivers, and (iii) can analyze the video feed to detect heart rate and breathing rate. The proposed system could be integrated with in-home monitoring sensors (Smart Grid, Professor Patrick Mantey), which could trigger an alert to family members when an out-of-the-ordinary event occurs. The robot could then be woken up and sent out to serve as a watchful eye over the Internet.